The O’Reilly Artificial Intelligence conference was held in midtown New York on September 26 and 27. The following are a number of themes and observations that I saw emerging from the presentations, keynotes, and side conversations. My objectives were to:

- Sense-check my understanding of the state of the industry and its maturity

- Uncover the emergent trends and new forms of thinking

- Identify some key players and technologies

- Extrapolate a sense of where this is headed in the short-to-medium term

- Find bridges between leadership strategy and implementation methods that we could apply

Keynotes and presentations were given by people from Baidu, Facebook, Google, IBM, Intel, McKinsey & Co, Microsoft, MIT, NVIDIA, O’Reilly, Stanford, and TensorFlow, as well as from other significant startups, academia, and NGOs.

My top-line notes

- What the AI community is actually about right now is Human Centred Design, Organisation Design, and supplying the disruptive tools and methods for the digital transformation of industries.

- Bots are exploding in popularity because we’re getting the NLP and behavioural process modelling right; that is, this is a type of ‘AI’ we know how to do, and it feels like AI.

- Because Deep Learning is the most AI-like technology we have, and because its results are so effective, there is concern that too much focus on it will cause progress to top out soon. New ideas are needed.

1. Definitions of AI

Questions about what AI actually is are still open. Strong AI has made almost no progress, and yet interest and hype are growing enormously. One presenter noted that what AI does is ‘Magic’ to non-practitioners; to those doing the work, it is mostly formalised algorithms or hierarchical feature detection (Deep Learning). The most telling definition was that ‘AI is what computers cannot do yet’. This is all a step change rather than something entirely new.

So, Artificial Intelligence does not really exist yet, and what we are all doing is much better Machine Learning. Chess-playing computers are no longer ‘AI’; they run a specialist algorithm. Things feel like AI when the interface becomes invisible, such as with a voice interface, but these are algorithms with behavioural modelling and a few feedback loops to knowledge services, such as voice recognition and a repository of answers. We can look to Nick Bostrom for a discussion of real AI and its time horizon. For the moment, it seems that AI can be used interchangeably with Machine Learning, mostly when a service feels a bit human-like.

2. User Experience and NLP

User Experience thinking has entered the data and services layer of Machine Learning, making behavioural data modelling and Human Centred Design core skills for AI practitioners. Modelling processes is not new: call centres and outsourcing firms have been doing it for a long time. But for machines to be more independent of human judgement, needing fewer humans in the loop for basic tasks, the decision and cognition processes have to be mapped. This is most obvious in Natural Language Processing, where the algorithm is structured around Intent, Entities, Actions, and Dialogue.

I expect the AI industry to further embrace insights from the arts, design, and cognitive science to help it model behaviour into algorithms. One talk I saw on conversational interfaces exemplified this. The features and functionality of the conversational model it put forward were Ethics, Personality, Episodic memory, Emotion, Social Learning, Topics/Content, and Facts/Transactions. It was noted that playwrights were being hired to help supply Deep Learning training data for the Personality and Emotion features.

3. Automation of Knowledge work

Task-based knowledge work is considered the next human activity to be automated. Business model disruption is expected to reduce waste, create surplus, optimise asset utilisation, and improve imperfect processes. All of this affects humans who are deemed a knowledge bottleneck: their decision making needs to be of higher quality and more consistent, they cannot digest and analyse data and content fast enough, and they are too ‘expensive’.

A lot of ‘AI’ companies are actually just automating processes and attaching knowledge services to key process steps. An example is x.ai who provide a calendaring service. Natural Language Processing extracts the entities from email text (including times and locations), maps a classification of Intent against a classification of answers to return Dialogue, and uses a mapping service for geolocation. This is all algorithm and process modelling.
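To show how much of this is "algorithm and process modelling", here is a minimal Python sketch of that kind of pipeline. It is not x.ai's implementation: regular expressions and keyword lists stand in for trained NLP models, the geolocation/mapping step is left out, and every name (`INTENT_KEYWORDS`, `DIALOGUE_TEMPLATES`, `extract_entities`, `classify_intent`, `respond`) is hypothetical. Entities (a time and a place) are extracted from the email text, the message is classified into an Intent, and the Intent is mapped to a Dialogue template.

```python
import re
from dataclasses import dataclass, field
from typing import Dict

# Hypothetical keyword lists standing in for a trained intent classifier.
# Order matters: "cancel" is checked first because "meeting" contains "meet".
INTENT_KEYWORDS = [
    ("cancel_meeting", ("cancel", "call off")),
    ("schedule_meeting", ("meet", "catch up", "coffee", "call")),
]

# Hypothetical "classification of answers": one dialogue template per intent.
DIALOGUE_TEMPLATES = {
    "schedule_meeting": "Happy to set that up for {time} at {location}.",
    "cancel_meeting": "Understood, I will cancel the meeting.",
    "unknown": "Sorry, could you clarify what you would like to arrange?",
}

# Crude entity patterns: clock times such as "3pm" or "10:30 am", and a
# capitalised place name that follows the word "at".
TIME_PATTERN = re.compile(r"\b(\d{1,2}(?::\d{2})?\s?(?:am|pm))\b", re.IGNORECASE)
LOCATION_PATTERN = re.compile(r"\bat ([A-Z][\w']*(?: [A-Z][\w']*)*)")


@dataclass
class ParsedEmail:
    intent: str
    entities: Dict[str, str] = field(default_factory=dict)


def extract_entities(text: str) -> Dict[str, str]:
    """Pull out the first time and the first location mentioned, if any."""
    entities = {}
    time_match = TIME_PATTERN.search(text)
    if time_match:
        entities["time"] = time_match.group(1)
    location_match = LOCATION_PATTERN.search(text)
    if location_match:
        entities["location"] = location_match.group(1)
    return entities


def classify_intent(text: str) -> str:
    """Return the first intent whose keywords appear in the text."""
    lowered = text.lower()
    for intent, keywords in INTENT_KEYWORDS:
        if any(keyword in lowered for keyword in keywords):
            return intent
    return "unknown"


def respond(text: str) -> str:
    """Map intent plus entities to a reply, asking for anything missing."""
    parsed = ParsedEmail(classify_intent(text), extract_entities(text))
    if parsed.intent == "schedule_meeting" and not all(
        key in parsed.entities for key in ("time", "location")
    ):
        return "What time and place would suit you?"
    return DIALOGUE_TEMPLATES[parsed.intent].format(**parsed.entities)


if __name__ == "__main__":
    print(respond("Can we meet for coffee at Joe's Cafe on Tuesday at 3pm?"))
```

Here the call prints "Happy to set that up for 3pm at Joe's Cafe." A production system would replace each hand-written rule with a learned model, but the shape of the process (extract, classify, map to a response) stays the same.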

This is a revolution in business process improvement. I saw presentations that included scenarios for how machines will augment humans, and that used the phrase “Work Design, for smart humans and smart machines”, along with new job roles to help organisations manage AI-driven change.

4. Simulating the world

There was little discussion of this, but I suspect it will become a growth area. ‘Knowing the world’ and the things within it is a prerequisite for scaling the capabilities of conversational interfaces and image recognition, particularly when connecting to the Internet of Things. Companies like Google already have a mapping of the world in their Knowledge Vault, and are using probabilistic feature detection to find new entities. Graph databases and Ontology development were present but under-represented. Graphs are at the heart of Neural Networks and Deep Learning, but the Ontology side of their capability is yet to come.

One session discussed Probabilistic Programming Languages, a new angle on some existing probability techniques. Using video as an example, the system presented was able to predict ‘what happens next’ and generate new video frames: a part of a room it was never shown, or a view of a face it had never seen. The face was exact, and the unseen room was blurry but surprising. This uses an Ontology attached to the code to assist with prediction and inference, and one of the stated aims is getting humans and machines to ‘speak the same language’. There is a lot of potential here for forecasting and scenario development.
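The core idea of probabilistic programming is to write a model as a program that generates data, then let inference work backwards from observations to likely causes and forwards to predictions. These notes do not identify the language used in the session, so the following is only a hand-rolled Python sketch of that idea under simple, hypothetical assumptions: an object moves at an unknown constant velocity, we observe noisy positions, and likelihood weighting (sample from the prior, weight each sample by how well it explains the data) predicts the next position. It is far simpler than the video example, uses no Ontology, and all function names and parameters are illustrative.

```python
import math
import random
from typing import List, Tuple


def sample_prior(rng: random.Random) -> float:
    """Prior belief about the unknown velocity (assumed uniform on [-5, 5])."""
    return rng.uniform(-5.0, 5.0)


def log_likelihood(velocity: float, observations: List[float], noise_sd: float) -> float:
    """Gaussian log-likelihood (up to a constant), assuming position = velocity * t."""
    total = 0.0
    for t, observed in enumerate(observations, start=1):
        total -= 0.5 * ((observed - velocity * t) / noise_sd) ** 2
    return total


def predict_next(
    observations: List[float],
    noise_sd: float = 0.5,
    num_samples: int = 20000,
    seed: int = 0,
) -> Tuple[float, float]:
    """Likelihood weighting: posterior mean velocity and predicted next position."""
    rng = random.Random(seed)
    velocities = [sample_prior(rng) for _ in range(num_samples)]
    log_weights = [log_likelihood(v, observations, noise_sd) for v in velocities]
    peak = max(log_weights)  # subtract the maximum before exp() to avoid underflow
    weights = [math.exp(lw - peak) for lw in log_weights]
    total = sum(weights)
    mean_velocity = sum(w * v for w, v in zip(weights, velocities)) / total
    next_t = len(observations) + 1
    return mean_velocity, mean_velocity * next_t


if __name__ == "__main__":
    velocity, next_position = predict_next([1.1, 1.9, 3.05, 4.0])
    print(f"velocity ~ {velocity:.2f}, next position ~ {next_position:.2f}")
```

For the observations shown, the estimates should land close to a velocity of 1.0 and a next position of 5.0. A real probabilistic programming language automates the inference step, so the modeller only writes the generative part.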

5. Technology and Global Issues

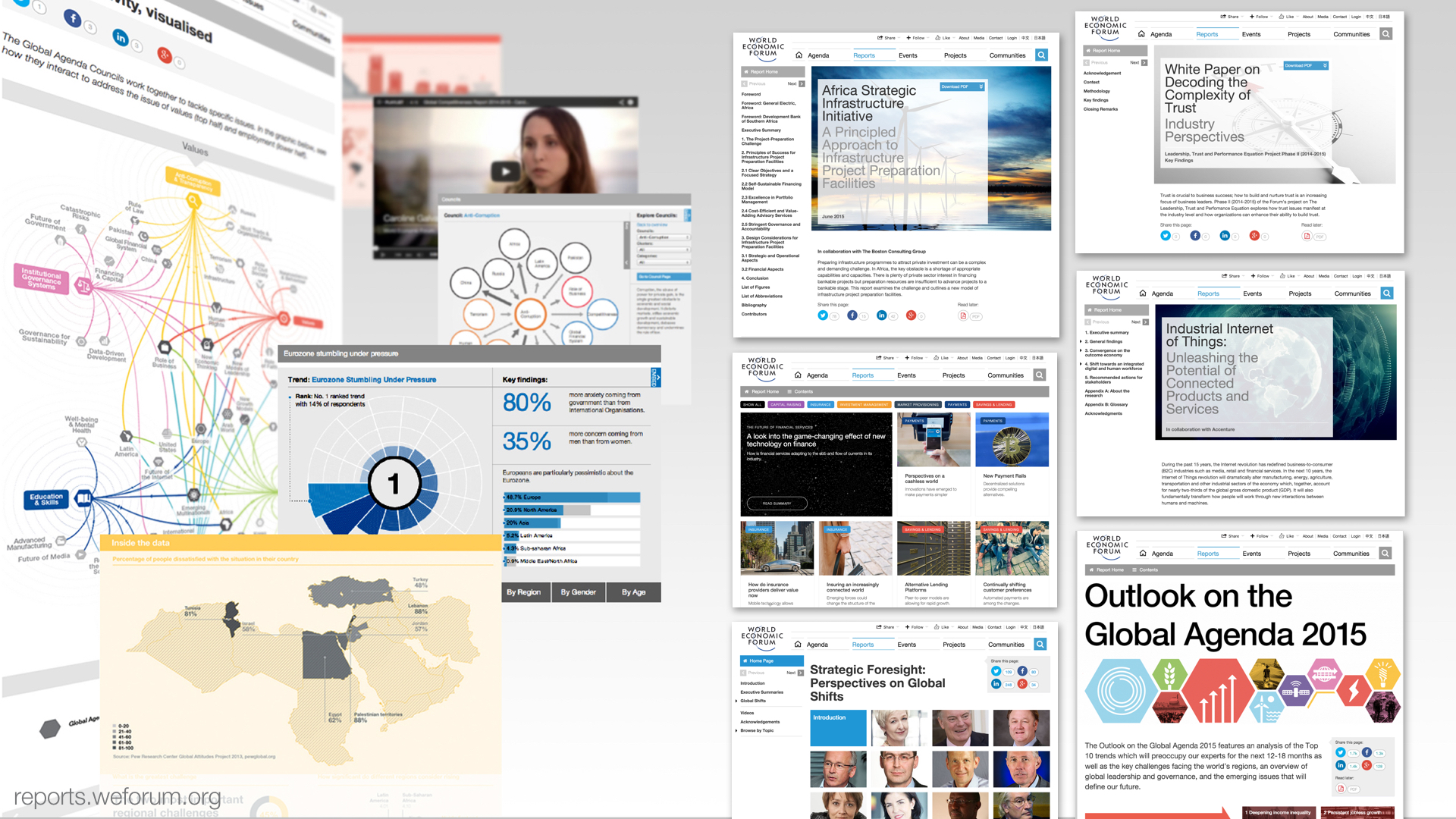

It was also interesting to see so many global issues being discussed: the future of jobs (including which jobs are most vulnerable), the digital transformation of industry, and how to use Deep Learning to inform decision makers about medical or aid effectiveness. Equally notable is O’Reilly, the conference organiser, moving towards global issues from its starting point as a technology publisher, whilst the World Economic Forum is moving in the opposite direction, towards enabling technologies. There is a huge overlap between The Forum’s Fourth Industrial Revolution theme and O’Reilly’s Next:Economy theme. This is more than Tim O’Reilly’s championing of Open Data. It’s baked into the ambition and ideas of what AI can achieve, and no doubt into a stack of science fiction that the practitioners have absorbed.

6. Algorithms and Training Data

A key Machine Learning method is to build algorithms that model processes and connect services into an API. In many respects this is process documentation for automation. Another is Deep Learning, whose hierarchical feature detection finds patterns (e.g. for image recognition) in Training Data. Its algorithms are learning routines and subroutines across a deep graph that represents the subject being analysed.

One talk argued that this is not an either/or, challenging the premise that the more training data you have, the less you need algorithm development. Instead, through Pedagogical Programming, the two can be combined for the benefit of both: the algorithm gives the Deep Learning focus, accelerating its learning process. This was described in terms of Concepts, Curricula, and Training Sources, by analogy with how people naturally learn. The Concepts are the things to be learnt, the Curricula are the steps for incrementally learning a subject or task (hitting a ball, riding a bicycle), and the Training Sources are the available knowledge corpora. Nudging a machine with incremental steps, rather than letting it figure everything out for itself, does seem like an obvious and valuable method. For me, this is a human centred design solution with an Ontology, and it aligns with the distinctions between Reinforcement, Supervised, and Unsupervised learning.
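To make the Concepts, Curricula, and Training Sources structure concrete, here is a toy Python sketch. It is an illustration under invented assumptions, not the presenter's framework: the concept ("is x above 0.5?"), the margin-based lessons, the hypothetical `training_source` function, and the one-feature perceptron (standing in for a Deep Learning model) are all made up for the example. Each lesson draws examples at least a given distance from the decision boundary, ordered from easy (wide margin) to hard (narrow margin), and the learner moves on only after an epoch with no mistakes. It shows the structure only and makes no claim that this curriculum speeds up learning.

```python
import random
from dataclasses import dataclass
from typing import List, Tuple

Example = Tuple[float, int]  # (input value, label 0 or 1)


@dataclass
class Concept:
    """The thing to be learnt: here, whether x lies above a boundary."""
    name: str
    boundary: float

    def label(self, x: float) -> int:
        return 1 if x > self.boundary else 0


@dataclass
class Lesson:
    """One curriculum step; a larger margin keeps examples away from the boundary."""
    name: str
    margin: float


def training_source(concept: Concept, lesson: Lesson, n: int, rng: random.Random) -> List[Example]:
    """Hypothetical training source: inputs in [0, 1) at least `margin` from the boundary."""
    examples: List[Example] = []
    while len(examples) < n:
        x = rng.random()
        if abs(x - concept.boundary) >= lesson.margin:
            examples.append((x, concept.label(x)))
    return examples


class Perceptron:
    """A one-feature perceptron with a bias term."""

    def __init__(self) -> None:
        self.w = 0.0
        self.b = 0.0

    def predict(self, x: float) -> int:
        return 1 if self.w * x + self.b > 0 else 0

    def update(self, x: float, y: int) -> bool:
        """Apply the perceptron rule; return True if the example was misclassified."""
        error = y - self.predict(x)  # -1, 0 or +1
        if error:
            self.w += error * x
            self.b += error
        return error != 0


def accuracy(model: Perceptron, data: List[Example]) -> float:
    return sum(model.predict(x) == y for x, y in data) / len(data)


def run_curriculum(concept: Concept, lessons: List[Lesson], n: int = 100,
                   max_epochs: int = 2000, seed: int = 0) -> Tuple[Perceptron, List[int]]:
    """Train on each lesson in order, moving on after an epoch with no mistakes."""
    rng = random.Random(seed)
    model = Perceptron()
    epochs_per_lesson: List[int] = []
    for lesson in lessons:
        data = training_source(concept, lesson, n, rng)
        for epoch in range(1, max_epochs + 1):
            rng.shuffle(data)
            mistakes = sum(model.update(x, y) for x, y in data)
            if mistakes == 0:
                break
        epochs_per_lesson.append(epoch)
    return model, epochs_per_lesson


if __name__ == "__main__":
    concept = Concept("above one half", boundary=0.5)
    curriculum = [Lesson("easy", 0.3), Lesson("medium", 0.15), Lesson("hard", 0.05)]
    model, epochs = run_curriculum(concept, curriculum)
    test_set = training_source(concept, Lesson("test", 0.05), 1000, random.Random(1))
    print("epochs per lesson:", epochs, "test accuracy:", accuracy(model, test_set))
```

The design choice mirrors the talk's analogy: the easy lesson gives the learner clearly separated examples, and each later lesson refines the boundary starting from what was already learnt rather than from scratch.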

Some further insights

- 99% of Internet of Things data is currently unused. This is an untapped resource for Deep Learning.

- The tech giants are mainly focussed on consumer rather than enterprise products

- AI engineers are a novelty in the enterprise and mostly viewed as aliens

- Agile programming doesn’t work for Machine Learning product development

- Biases introduced by algorithm design being amplified at scale is a known but not yet fully addressed problem

- Search is becoming one part of a Findability and Conversational Interface technology stack.

- A Conversational Software Taskforce is being established by Betaworks

- Startups need to focus on generalised features for their products to scale. Yet this creates a perceived AI product gap, because enterprises need more bespoke services