To think that the new Apple AirPods are just the latest iteration of headphones misses the point. As does the idea that they are about liberation from tangled white cords. Their significance is far greater than that. These are the first signs of the Fourth Industrial Revolution, an age when computing will become an extension of the individual and part of everything we do.

This marks the beginning of the mass adoption of new ways of communicating with computers. Ambient computing and invisible interfaces are taking hold and changing our relationship with technology.

We are experiencing a step change rather than something entirely new. Bluetooth headphones are already readily available. What is different here is the AirPods’ integration with an AI-driven assistant that understands natural-language voice commands: Siri.

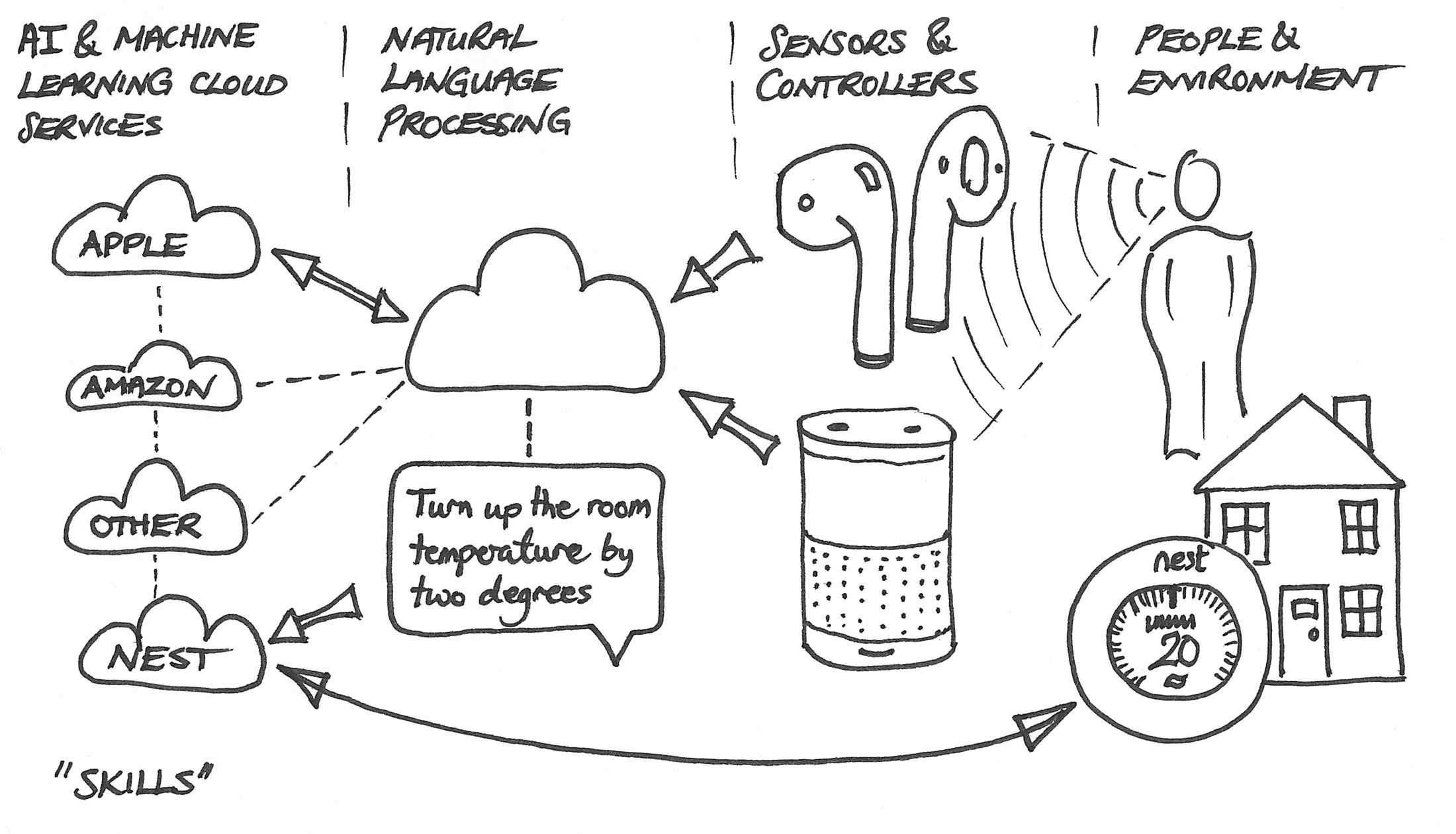

Other voice-activated services such as Google Home, Microsoft Cortana, Amazon Echo and IBM Watson Conversation are all playing in this field. What is changing is that these companies are starting to get the experience design right. That covers the hardware that accurately hears the voice input, the artificial intelligence cloud services that understand the intent, and the quality and relevance of the actions or answers given in response. A lot can go wrong between a voice command and an artificially intelligent answer, but each link in the chain is improving quickly.

The AirPods are filled with miniaturized sensors and features. They detect when you’re talking and use beam-forming microphones to filter out background noise. The result is a clear voice signal for the cloud, so it can hear exactly what you’re asking for. This is a crucial first step in voice interface usability. Apple have also introduced a new touch gesture to the human-computer relationship. We’ve seen gestural relationships before. Point, Click and Drag came with the mass adoption of personal computers, the graphical user interface and the mouse. Tap and Swipe ushered in the smartphone era and brought ever-present computing into our lives. This new “tap tap” is the sound of an invisible, screenless interface being activated.

Amazon’s Echo sits in the home, waiting to deliver cloud services in response to voice commands. Like the Echo, the AirPods show the art of product design. The right human-centred design solution removes barriers to usage, and the result begins to feel natural, ambient and available. At what point will it feel as if the headphones are doing the work, and your mobile phone rarely comes out of your pocket?

Natural language processing in the cloud is a gateway technology to other interconnected machine-learning services. It is also a form of AI that big tech companies have started to get right. Two things made this possible: better analysis of bigger data sets, and algorithms that are better at interpreting language. This helps explain the recent explosion in text-based chatbots built into messaging platforms, from Facebook Messenger to WeChat, Telegram and Skype. Expect to see a lot more of them soon.

By talking to Amazon’s Echo, you can book an Uber cab, arrange for your dry cleaning to be picked up, ask Nest to change the room temperature, or pay your energy bill. These are all things you could previously do by sitting at your computer and pulling up a website. Now they are a voice command away. More human. More accessible. More of a “service”. More ambient.

For the Amazon Echo, these integrations with other companies’ services are called Skills, a very AI-centric name. This technology has the potential to tighten business relationships. So it’s understandable that Google, Facebook, Amazon, IBM and Microsoft have announced a joint Partnership on Artificial Intelligence to Benefit People and Society. Collaboration is the new competition.

This fast-moving area of business and industry activity has been underpinned by significant investments in artificial intelligence over the past few years. IBM are pumping over a billion dollars into their Watson division. Watson now includes conversational technologies that help other businesses build their own voice and chat interfaces into their products and customer experiences. Apple was an early adopter, launching Siri on the iPhone 4S in 2011. In 2014 it moved Siri’s speech recognition to a neural network, and the aim was to drive product uptake through a more accurate, more human-feeling experience.

Where is all of this headed? First, it is headed through a hype cycle. And then it is headed towards an environment that fluidly senses you, hears you, knows your preferences, and responds. This is one of the many promises of the “internet of things”. The AirPods are not headphones; they are a new kind of device within the ecosystem of ambient computing. Tap tap.